YOLOv4-Tiny vs YOLOv8 opens the most practical question in real-time CV today: which model gives you the best trade-off between speed and accuracy?

YOLO still rules many production vision systems. Small teams and students need models that run on constrained hardware.

This post compares both models so you can pick the right one fast. Read on to learn architecture, benchmarks, deployment tips, and which model Algerian developers should use.

What is YOLOv4-Tiny vs YOLOv8 and why does it matter?

Short answer: it’s a speed vs accuracy trade.

YOLOv4-Tiny is a compact, very fast Darknet-era detector. YOLOv8 is Ultralytic’s modern family (n/s/m/l/x) focused on accuracy, multi-task support and developer UX.

Why it matters: real-time apps (traffic cams, drones, robotics) need a pragmatic choice. Which model you pick affects FPS, power draw, training time, and final accuracy.

Check : Enhancing Real-Time Object Detection: Implementing YOLOv4-Tiny with OpenCV – Around Data Science

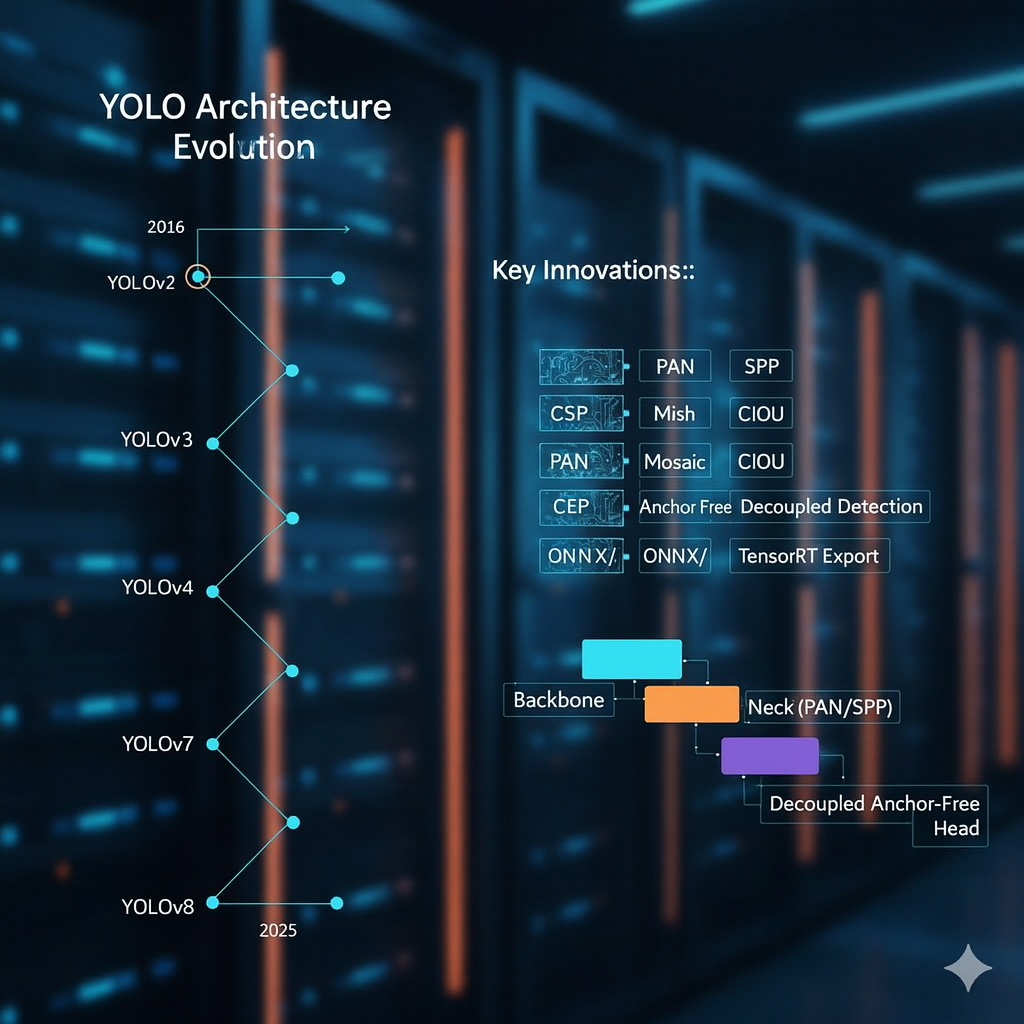

YOLO architecture evolution, a quick timeline

Start short: YOLO evolved fast.

- YOLO → YOLOv2 → YOLOv3: backbone and anchor refinements.

- YOLOv4: bag of tricks and speed/accuracy improvements.

- YOLOv7: more architectural tuning and speed wins.

- YOLOv8: new Ultralytics design, anchor-free head, end-to-end training and multi-task support.

Key innovations:

- CSP / PAN / SPP / Mish / Mosaic / CIoU and other training tricks.

- Anchor-free heads and decoupled detection in modern YOLOs.

- Better export paths (ONNX, TensorRT) and multi-task APIs in YOLOv8.

YOLOv4-Tiny overview

Architecture : simplified Darknet backbone with 2 YOLO heads. Small feature extractor designed for speed.

Speed : extremely fast on desktop GPUs and optimized runtimes (hundreds FPS with TensorRT and batch tricks). On commodity GPUs it reaches hundreds of FPS; on embedded devices it’s the practical choice.

Hardware compatibility : runs on CPU, embedded GPUs, NPUs, and OpenVINO/TensorRT pipelines. Great on older GPUs and some VPUs.

Training requirements : low memory and shorter epochs. Easy to train on small datasets. Tools: Darknet, OpenVINO, TAO, and conversion flows.

Best-use cases:

- Traffic cameras with low latency needs.

- Low-power devices (older Jetsons, many Raspberry Pi setups).

- Systems where detect rate matters more than perfect accuracy.

YOLOv8 overview

New architecture: Ultralytics’ backbone + neck + decoupled, anchor-free head. Comes in n/s/m/l/x sizes. Built for modern tooling.

Enhanced detection performance: YOLOv8 variants deliver higher COCO mAP at comparable image sizes. Example: YOLOv8n reports ~37.3 mAP on COCO in Ultralytics’ benchmarks.

Multi-task support: detection, segmentation, classification, and pose out of the box. Great for research and multi-modal pipelines.

Training speed & generalization: modern optimizer defaults, data augmentations, and easy fine-tuning. Generalizes well without heavy engineering.

Best-use cases:

- Research and high-accuracy detection.

- Production systems where high precision outweighs marginal latency.

- Multi-task apps (segmentation + detection).

Side-by-Side comparison (typical published ranges)

| Metric | YOLOv4-Tiny (typical) | YOLOv8 (example: n / s / m) |

|---|---|---|

| FPS (GPU desktop) | 200–700 (TensorRT, batch tricks). | 40–200 (n→m) depending on size; n is fastest. |

| mAP (COCO, val) | ~22–40% AP (AP50 varies by config). Model size ≈22–24 MB. | YOLOv8n ~37.3 (COCO), s/m higher (≈45–52% for larger). |

| Params | ~6–8M (varies by build). | n: ~3.5M; s: ~11M; m: ~26M; l/x larger. |

| Model size | ~22 MB (float32 weights) | YOLOv8n small (few MBs to tens MBs depending on format). |

| Dataset compatibility | COCO, Pascal, custom | COCO, custom, easy transfer learning. |

Note: benchmarks vary with image size, batch, runtime (PyTorch vs TensorRT), and quantization. Use these as practical ranges, not hard guarantees.

Benchmarking on real hardware — practical notes

CPU test: YOLOv4-Tiny runs faster than YOLOv8 on weak CPUs. YOLOv8n narrows gap but still slower. Use ONNX + OpenVINO for CPU acceleration.

GPU test: On a modern GPU (T4/A100/RTX series), YOLOv8 (small→medium) gives better mAP with acceptable FPS. YOLOv4-Tiny excels only if you need extreme FPS.

Jetson Nano / Raspberry Pi:

- Jetson Nano: YOLOv8n may reach ~5–8 FPS without heavy optimization; YOLOv4-Tiny often achieves higher FPS.

- Raspberry Pi: YOLOv8 requires quantization and accelerators (Hailo, Coral) to be practical. Seeed/Seeed Studio reports and community guides give concrete steps.

Energy consumption: smaller models + quantization = lower power. If battery life matters, favor tiny models or aggressive INT8 quantization.

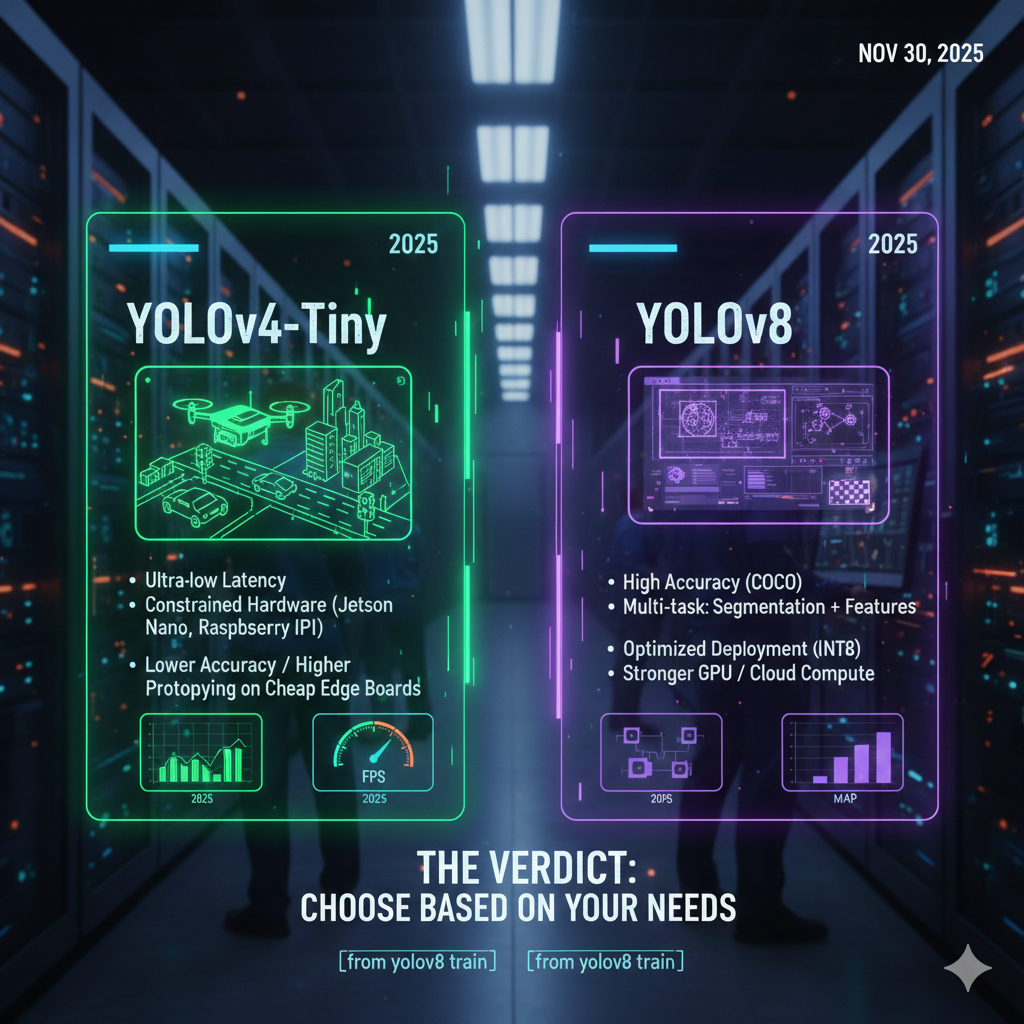

Accuracy & Speed trade-off — when each wins

When YOLOv4-Tiny wins:

- You need ultra-low latency.

- Hardware is severely constrained.

- You can accept lower accuracy for throughput.

- Prototyping on cheap edge boards.

When YOLOv8 wins:

- Require higher detection quality on COCO-like datasets.

- Need segmentation / pose alongside detection.

- You can invest in optimization (TensorRT, INT8) or run on a stronger GPU.

Which should Algerian developers use?

For students :

- Start with YOLOv8n or YOLOv8s for learning. Easy APIs and transfer learning. Use Google Colab or campus GPU.

For startups :

- Use YOLOv8 (s/m) for production when accuracy matters. Profile with TensorRT/ONNX. Consider YOLOv4-Tiny only if the product must run on very cheap hardware.

For embedded systems :

- YOLOv4-Tiny or heavily quantized YOLOv8n. Test on your target board (Jetson Nano, Raspberry Pi + accelerator). See device guides for benchmarks.

Read more : Command Executer AI Agent for Windows : Simplifying Command Execution – Around Data Science

9 bonus tips for YOLOv4-Tiny vs YOLOv8

- Quantize aggressively (INT8) for edge; validate accuracy drop.

- Use input resizing (e.g., 320/416) to speed up inference with minimal accuracy loss.

- Export to ONNX → TensorRT for Jetson/NVIDIA deployments.

- Prune or distill YOLOv8 to create a custom tiny model for specific classes.

- Use mixed precision (FP16) on GPUs to get better throughput without major accuracy loss.

Conclusion for YOLOv4-Tiny vs YOLOv8

- Choose YOLOv4-Tiny when latency and low compute are the top constraints.

- Choose YOLOv8 when you need higher accuracy, multi-task features, and a modern toolchain.

- Optimize with quantization, TensorRT, or OpenVINO depending on your target hardware.

- Benchmark on your real device, synthetic numbers rarely tell the whole story.

Quick summary:

- YOLOv4-Tiny: ultra-fast, tiny footprint, easier edge deployment.

- YOLOv8: modern, accurate, multi-task, better long-term support.

Start your journey to become a data-savvy professional in Algeria.

👉 Subscribe to our newsletter, follow Around Data Science on LinkedIn, and join the community on Discord.

Downloads & datasets (official ):

- YOLOv8 (Ultralytics): docs & models – Ultralytics GitHub / Docs.

- YOLOv4 / YOLOv4-Tiny (Darknet / AlexeyAB): GitHub and weights.

- COCO dataset: standard benchmark for detection.

Last sentence: Choose wisely based on constraints and test — YOLOv4-Tiny vs YOLOv8.

0 Comments